How Many Industrial Cameras Can One Host Computer Support in a Vision System?

In modern vision systems, determining how many cameras a single host (for example, a PC or server) can support is a critical question for system design, scalability, and cost optimization. The answer depends on several interdependent factors: hardware capabilities, software efficiency, industrial camera specifications, and application requirements. This article examines these key variables and presents a framework for estimating camera capacity in a vision system.

1. Hardware Components and Their Impact

The host's hardware is the foundation of camera support. Capacity depends largely on two elements: the processing units, and the memory, storage, and I/O subsystem.

1.1 Processing Units: CPU and GPU

The CPU handles a wide range of image-processing tasks, from basic filtering to complex machine-learning inference. High-resolution or high-frame-rate cameras generate large volumes of data, which strains the CPU. Multi-core CPUs such as the Intel i9 or AMD Threadripper can process streams in parallel by distributing tasks across cores. GPUs, on the other hand, have transformed vision systems through parallel compute acceleration, which is especially critical for tasks such as 3D vision and deep learning in autonomous driving. When camera streams feed GPU-optimized pipelines, such as those built on CUDA for NVIDIA GPUs, processing load is offloaded from the CPU, which can potentially triple the number of cameras supported, depending on the workload.

1.2 Memory, Storage, and I/O

Sufficient RAM is essential for buffering video streams and processed data. A 4K camera running at 30 FPS produces on the order of 300 MB/s of uncompressed data; the exact figure depends on pixel format, from about 250 MB/s at 8 bits per pixel to about 750 MB/s for 24-bit RGB. This drives up memory requirements in multi-camera configurations. For high-resolution cameras, plan on allocating at least 4–8 GB of RAM per camera. High-speed storage such as NVMe SSDs and fast I/O interfaces such as USB 3.2 and PCIe are vital for data ingest and storage. Older interfaces can severely limit the system's scalability.
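As a rough check on the figures above, the following sketch computes the uncompressed data rate of one stream and the RAM needed to buffer it for several cameras. The function names, the three pixel formats, the one-second buffer, and the four-camera setup are illustrative assumptions, not values from a specific camera or system.

```python
def stream_rate_bytes_per_s(width, height, fps, bits_per_pixel):
    """Uncompressed data rate of one camera stream in bytes per second."""
    return width * height * fps * bits_per_pixel / 8


def buffer_bytes(rate_bytes_per_s, seconds_buffered, num_cameras):
    """RAM needed to hold `seconds_buffered` of video for every camera."""
    return rate_bytes_per_s * seconds_buffered * num_cameras


if __name__ == "__main__":
    # Assumed pixel formats: 8-bit raw/mono, 12-bit YUV 4:2:0, 24-bit RGB.
    for label, bpp in [("8-bit raw", 8), ("YUV 4:2:0", 12), ("RGB 24-bit", 24)]:
        rate = stream_rate_bytes_per_s(3840, 2160, 30, bpp)
        ram = buffer_bytes(rate, seconds_buffered=1.0, num_cameras=4)
        print(f"{label}: {rate / 1e6:.0f} MB/s per camera, "
              f"{ram / 1e9:.2f} GB to buffer 1 s from 4 cameras")
```

Raw frame buffers are only part of the memory footprint; intermediate images and model weights typically add to it, which is one reason a per-camera allowance of several gigabytes is a sensible starting point.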

2. Industrial Camera Specifications

Industrial camera parameters directly affect the load on the host, chiefly through two factors: resolution and frame rate, and compression and interface.

2.1 Resolution and Frame Rate

Higher resolution and frame rate mean more data to process. A 4K camera produces four times as many pixels as a 1080p camera, which significantly raises processing requirements. Likewise, a 120 FPS camera generates four times as much data as a 30 FPS camera at the same resolution. Sports broadcasting uses high-resolution, high-frame-rate cameras, which places a very heavy load on the server and calls for powerful hardware to avoid quality loss.

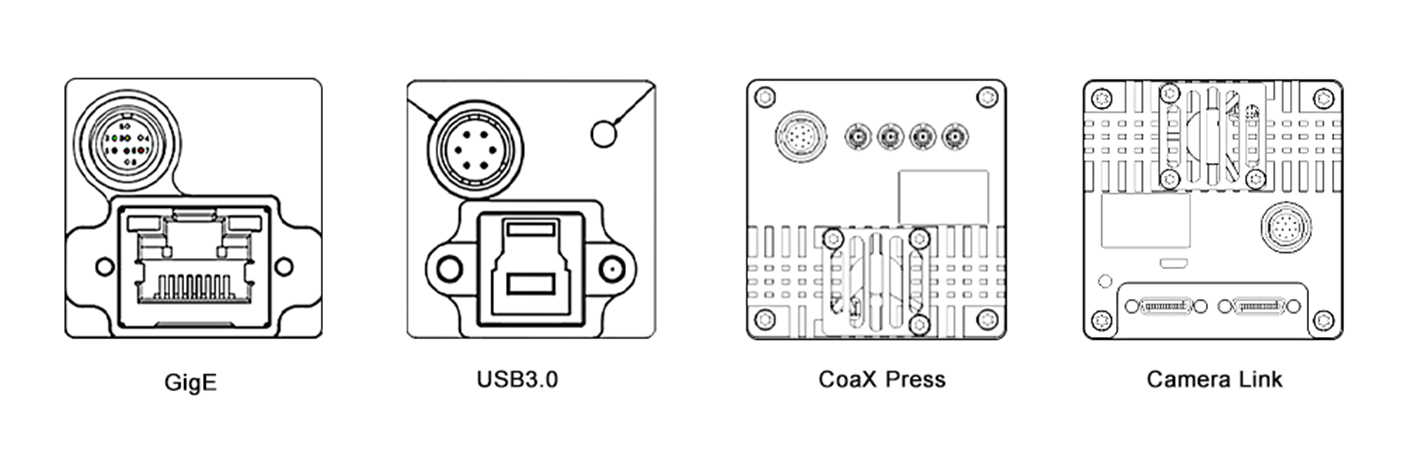

2.2 Compression and Interface

The choice of compression format determines data size and processing cost. Compressed formats such as H.264 reduce bandwidth but require decoding on the host. Uncompressed formats preserve higher fidelity but consume more bandwidth and memory. The camera interface is also a critical factor. High-speed interfaces such as GigE Vision and CoaXPress enable efficient data transfer in multi-camera setups, while older interfaces such as USB 2.0 restrict scalability because of their limited bandwidth.
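To make the interface limit concrete, here is a minimal sketch that estimates how many uncompressed streams fit on one link. The link rates are nominal signaling rates; the 0.8 efficiency factor, the helper name `cameras_per_link`, and the 1080p 8-bit example stream are assumptions chosen for illustration, and real usable throughput should be measured on the target hardware.

```python
import math

# Nominal link rates in Gbit/s. Usable throughput is lower because of
# protocol overhead; the default efficiency of 0.8 is an assumption.
NOMINAL_GBIT_PER_S = {
    "USB 2.0": 0.48,
    "GigE Vision (1 GbE)": 1.0,
    "USB 3.2 Gen 1": 5.0,
    "CoaXPress CXP-6 (one lane)": 6.25,
}


def cameras_per_link(link_gbit_per_s, stream_bytes_per_s, efficiency=0.8):
    """Whole number of identical streams that fit on one link."""
    usable_bytes_per_s = link_gbit_per_s * 1e9 / 8 * efficiency
    return math.floor(usable_bytes_per_s / stream_bytes_per_s)


if __name__ == "__main__":
    # Assumed stream: 1080p, 30 FPS, 8 bits per pixel, uncompressed.
    stream = 1920 * 1080 * 30 * 8 / 8
    for name, rate in NOMINAL_GBIT_PER_S.items():
        print(f"{name}: {cameras_per_link(rate, stream)} camera(s)")
```

Under these assumptions a single USB 2.0 link cannot carry even one such stream, which illustrates why older interfaces constrain multi-camera designs.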

3. Software and Processing Pipeline

Software efficiency is just as critical as hardware. Two areas matter most for system performance: the operating system and software tools, and multithreading and optimization.

3.1 Operating System and Software Tools

The operating system and its drivers form the software foundation. Real-time operating systems (RTOS) minimize latency and are ideal for applications such as robotic control. Linux-based systems are popular because of their open-source support. Optimized drivers improve hardware performance. Vision software and libraries, such as OpenCV, MATLAB, and deep-learning frameworks like TensorFlow or PyTorch, differ in computational efficiency. For example, a host running basic edge detection can support more cameras than one running a GPU-optimized YOLO model, because the model is far more computationally demanding.

3.2 Multithreading and Optimization

Effective multithreading and parallelization are key to maximizing system performance. Multithreading lets tasks run concurrently across CPU cores, while parallelization on GPUs accelerates data processing. Technologies such as OpenMP and CUDA provide frameworks for implementing both. In a multi-camera surveillance system, OpenMP can distribute the processing of camera streams across CPU cores and CUDA can accelerate image analysis on the GPU, allowing more cameras to be handled.
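The per-camera distribution pattern described here can be sketched with Python's standard `concurrent.futures` thread pool, used as a stand-in for OpenMP rather than as an equivalent. The camera source is simulated with synthetic frames, and all function names are hypothetical. Note also that in CPython, pure-Python CPU-bound work is serialized by the global interpreter lock, so a real pipeline would typically rely on native image libraries or separate processes for the heavy work.

```python
from concurrent.futures import ThreadPoolExecutor


def synthetic_frames(camera_id, num_frames, frame_size=1024):
    """Stand-in for a camera driver: yields fake 8-bit frames as bytes."""
    for i in range(num_frames):
        value = (camera_id * 10 + i) % 256
        yield bytes([value]) * frame_size


def process_stream(camera_id, num_frames):
    """Per-camera worker: computes the mean brightness of each frame."""
    means = [sum(frame) / len(frame)
             for frame in synthetic_frames(camera_id, num_frames)]
    return camera_id, means


def process_all(num_cameras, num_frames, max_workers=4):
    """Submit one task per camera to a shared pool and collect results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(process_stream, cam, num_frames)
                   for cam in range(num_cameras)]
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    results = process_all(num_cameras=4, num_frames=3)
    for cam, means in sorted(results.items()):
        print(f"camera {cam}: {means}")
```

Keeping one task per camera makes it easy to see how adding cameras increases the load on a fixed pool of workers.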

4. Application Requirements

The nature of the vision task determines how resources are allocated. The two main determinants are real-time requirements and processing complexity.

4.1 Real-Time vs. Offline Processing

Real-time applications such as autonomous driving and industrial automation require low-latency processing, which limits the number of cameras a host can support. Offline processing, such as batch video analysis, can handle more cameras, but results are delayed.
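One way to reason about the real-time limit is as a time budget: every camera's frame has to be processed within one frame period. The sketch below is a deliberately simplified model; it assumes all cameras share the same frame rate, a constant per-frame processing time, and perfect scaling across workers, and it ignores transfer and scheduling overhead. The function name and the example timings are hypothetical.

```python
import math


def max_cameras_realtime(fps, per_frame_ms, parallel_workers=1):
    """Cameras whose frames can all be processed within one frame period."""
    frame_period_ms = 1000.0 / fps
    frames_per_worker = math.floor(frame_period_ms / per_frame_ms)
    return frames_per_worker * parallel_workers


if __name__ == "__main__":
    # Assumed timings: 5 ms for a light task, 12 ms for a heavier one.
    print(max_cameras_realtime(fps=30, per_frame_ms=5.0))
    print(max_cameras_realtime(fps=30, per_frame_ms=12.0, parallel_workers=4))
```

Offline processing removes the frame-period constraint, so the same host is then bounded by total throughput and storage rather than by latency.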

4.2 Processing Complexity

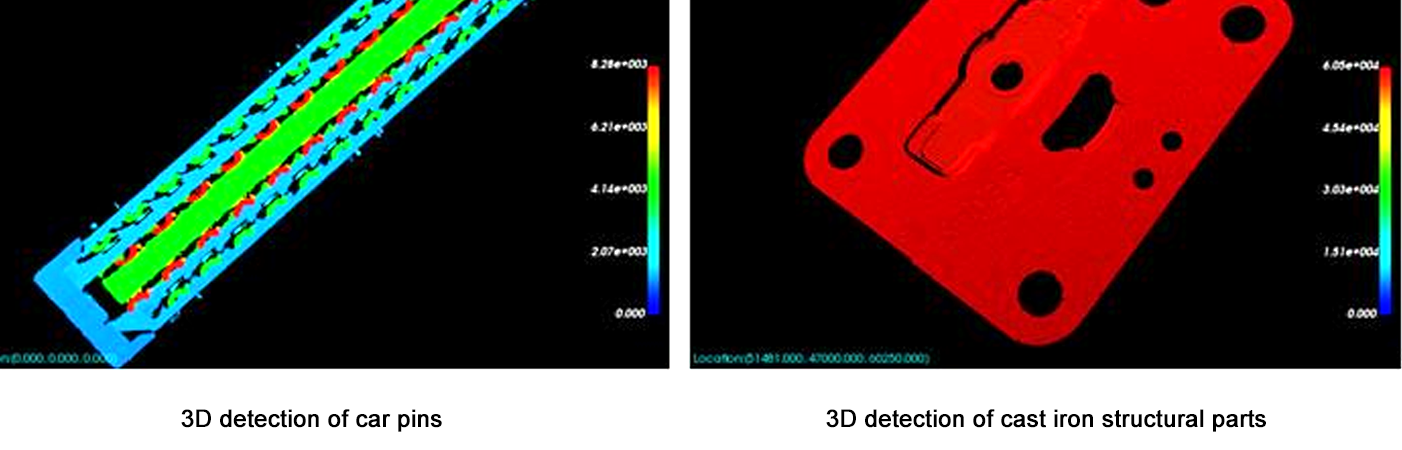

Simple tasks such as motion detection impose a low computational load, allowing a host to support more cameras. Complex tasks such as 3D reconstruction or advanced face recognition need substantial resources, which reduces the number of cameras supported. For example, a host that can support 10 cameras for motion detection might support only 3 for real-time 3D depth estimation.

5. Estimation Framework

Use the following steps to estimate camera capacity:

Define Camera Parameters: Resolution, frame rate, compression, and interface.

Calculate Data Throughput: Uncompressed Data Rate (bytes/s) = Resolution × Frame Rate × Bit Depth / 8 (for example, 1080p at 30 FPS = 1920×1080×30×24 / 8 ≈ 187 MB/s, or about 1.5 Gbit/s).

Assess Hardware Limits: CPU/GPU processing throughput ≥ total data throughput × processing overhead factor (2–5× for complex tasks).

Test with Prototypes: Use profiling tools (for example, Intel VTune, NVIDIA Nsight) to measure resource usage for a single camera, then scale linearly and adjust for parallelization gains or losses.
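The first three steps can be sketched as a short calculation. The `Camera` class, the `estimate_capacity` helper, and the 2 GB/s host throughput are hypothetical; in practice the host figure would come from the prototype measurements in the last step.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Step 1: the camera parameters that drive the data rate."""
    width: int
    height: int
    fps: float
    bits_per_pixel: int

    def data_rate(self):
        """Step 2: uncompressed data rate in bytes per second."""
        return self.width * self.height * self.fps * self.bits_per_pixel / 8


def estimate_capacity(camera, host_throughput_bytes_per_s, overhead_factor):
    """Step 3: identical cameras that fit within the host's throughput."""
    required = camera.data_rate() * overhead_factor
    return math.floor(host_throughput_bytes_per_s / required)


if __name__ == "__main__":
    cam = Camera(width=1920, height=1080, fps=30, bits_per_pixel=24)
    print(f"{cam.data_rate() / 1e6:.1f} MB/s per camera")
    # Hypothetical host that sustains 2 GB/s of processed pixel data.
    for factor in (2, 5):
        print(f"overhead {factor}x: {estimate_capacity(cam, 2e9, factor)} camera(s)")
```

The result is only a starting estimate; contention for memory bandwidth and I/O usually means real capacity has to be confirmed by adding cameras incrementally while monitoring resource usage.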

Conclusion

The number of cameras a host can support in a vision system is not a fixed figure; it is a balance among hardware capabilities, camera specifications, software optimization, and task complexity. For most systems, the most reliable approach is to start with a prototype and scale up gradually while monitoring resource usage. As hardware (for example, faster GPUs and AI accelerators) and software (for example, edge computing frameworks) continue to advance rapidly, the capacity to support more cameras at higher performance will keep growing. This evolution will enable more sophisticated and scalable vision solutions and open new possibilities in industries such as healthcare, transportation, security, and entertainment.

This article gives system architects and engineers a basic understanding of the problem and underlines the need for application-specific testing and optimization. By weighing all the relevant factors, it is possible to design vision systems that are both efficient and able to meet the growing demands of modern applications.