Why Is It Difficult for Machine Vision to Achieve High-Precision Dimension Inspection?

In the rapidly evolving landscape of industrial automation and quality control, machine vision has emerged as a powerful tool for various inspection tasks. However, despite its many advantages, achieving high-precision dimension inspection remains a significant challenge. This article delves into the key reasons behind the difficulty of attaining accurate dimensional measurements through machine vision.

Hardware-Related Constraints

The hardware components of a machine vision system, including cameras and lenses, impose inherent limits on precision. A low-resolution camera cannot capture the fine details of an object, which leads to inaccurate dimension calculations. Even with a high-resolution camera, pixel size is a crucial factor. Smaller pixels can, in theory, resolve finer detail, but each one collects less light, which lowers the signal-to-noise ratio and increases image noise. This noise distorts the edges of objects, making it hard to define their boundaries precisely.
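Whether a camera can resolve a given tolerance depends on how much of the part each pixel covers, not on the pixel count alone. The sketch below divides the field of view by the number of pixels along one axis and compares the result with the tolerance. The 50 mm field of view, 2448-pixel sensor width, and 0.01 mm tolerance are hypothetical values chosen for illustration.

```python
def object_pixel_size_mm(fov_mm: float, pixels: int) -> float:
    """Width of the part surface imaged onto one pixel, in millimetres."""
    return fov_mm / pixels


def pixels_across_tolerance(tolerance_mm: float, fov_mm: float, pixels: int) -> float:
    """Number of pixels that span the tolerance band along one image axis."""
    return tolerance_mm / object_pixel_size_mm(fov_mm, pixels)


# Hypothetical setup: 50 mm field of view imaged across 2448 pixels,
# inspecting a feature with a 0.01 mm tolerance.
fov_mm, pixels, tolerance_mm = 50.0, 2448, 0.01
print(f"{object_pixel_size_mm(fov_mm, pixels) * 1000:.1f} um per pixel")  # prints 20.4 um per pixel
print(f"{pixels_across_tolerance(tolerance_mm, fov_mm, pixels):.2f} pixels across the tolerance")  # prints 0.49 ...
```

With these numbers one pixel covers about 20 µm of the part, so a 10 µm tolerance spans less than half a pixel. Such a measurement would have to rely on sub-pixel edge estimation or a smaller field of view.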

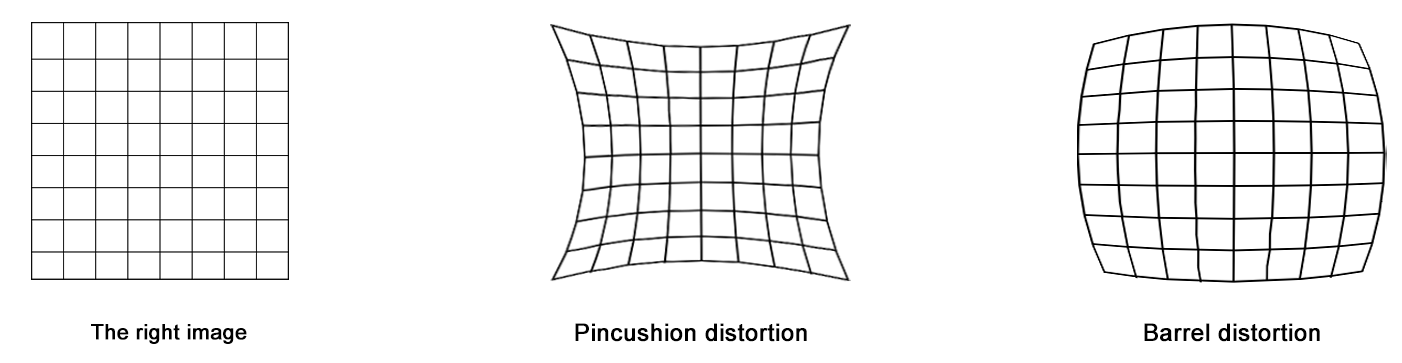

Industrial lenses also play a critical role in measurement accuracy. Geometric distortions, such as barrel and pincushion distortion, are common in lenses. These distortions make straight lines in the real world appear curved in the captured image, shifting edge positions by an amount that grows toward the edges of the field of view and biasing any dimension measured there. Lenses may also suffer from chromatic aberration, in which different wavelengths of light are focused at different points, producing color fringing around objects and further degrading measurement precision. Correcting these imperfections requires careful calibration, and achieving perfect correction across the entire field of view is extremely difficult.
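Calibration typically describes lens distortion with a parametric model and then inverts it to correct measured points. The sketch below assumes the radial part of the widely used Brown-Conrady model, applied to normalized image coordinates. The coefficients `k1` and `k2` are hypothetical stand-ins for values a calibration would estimate, and tangential distortion is ignored.

```python
def distort(x: float, y: float, k1: float, k2: float) -> tuple[float, float]:
    """Apply the radial distortion model to normalized coordinates (x, y)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort(xd: float, yd: float, k1: float, k2: float,
              iterations: int = 20) -> tuple[float, float]:
    """Invert the radial model by fixed-point iteration (it has no closed-form inverse)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y


# Hypothetical calibration result: a negative k1 produces barrel distortion.
k1, k2 = -0.1, 0.01
for x in (0.1, 0.3, 0.5):
    xd, _ = distort(x, 0.0, k1, k2)
    print(f"x={x:.1f}: imaged at {xd:.4f}, shift {xd - x:+.4f}")
```

Because the polynomial cannot be inverted in closed form, the sketch inverts it iteratively. The loop converges quickly for moderate distortion like the example values, but strongly distorting lenses may need a better initial guess or a different solver.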

Physical Limitations of Optics

The physical principles of optics present fundamental barriers to high-precision dimension inspection in machine vision. Light diffraction is a major issue. Light that passes through an aperture, such as the lens opening, or around the edge of a small object diffracts. As a result, even a perfect lens images each point of the object as a small blur spot rather than a sharp point, and the edges of the object's image blur accordingly. When inspecting small components, this effect can make it impossible to distinguish closely spaced features accurately, leading to errors in dimension measurement.
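The size of this blur spot can be estimated from the diffraction limit of a circular aperture. The Airy disk diameter is approximately 2.44·λ·N, where λ is the wavelength and N the f-number. The sketch compares it with the pixel pitch. The 550 nm (green) wavelength and 3.45 µm pixel pitch are assumptions, and the formula ignores the larger working f-number, roughly N·(1 + m), that applies at higher magnification m.

```python
def airy_disk_diameter_um(wavelength_nm: float, f_number: float) -> float:
    """Approximate Airy disk diameter (to the first dark ring) on the sensor, in um."""
    return 2.44 * (wavelength_nm / 1000.0) * f_number


PIXEL_UM = 3.45  # hypothetical pixel pitch
for n in (2.8, 5.6, 8.0, 11.0):
    d = airy_disk_diameter_um(550.0, n)
    print(f"f/{n}: blur spot {d:.1f} um, about {d / PIXEL_UM:.1f} pixels")
```

At f/8 the blur spot already spans about three of these pixels, so an imaged edge cannot be sharper than that regardless of how fine the sensor is.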

Another optical limitation is the limited depth of field. If the object has a complex three-dimensional shape, or if its position relative to the camera varies, parts of it may be out of focus. This defocus blur distorts the object's appearance, making it difficult to measure dimensions precisely. Adjusting the depth of field involves trade-offs: stopping the lens down (raising the f-number) extends the depth of field but enlarges the diffraction blur and so lowers resolution, while opening the aperture improves resolution but narrows the depth of field.
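The trade-off can be made concrete with a common close-up approximation for total depth of field, DOF ≈ 2·N·c·(1 + m)/m², where N is the f-number, c the largest acceptable blur on the sensor, and m the optical magnification. The sketch prints this next to the diffraction blur, estimated as the Airy disk diameter 2.44·λ·N. The 6.9 µm blur limit (two 3.45 µm pixels), 0.5× magnification, and 550 nm wavelength are hypothetical choices.

```python
def depth_of_field_mm(f_number: float, coc_mm: float, magnification: float) -> float:
    """Close-up approximation of total depth of field: 2*N*c*(1 + m) / m**2."""
    return 2.0 * f_number * coc_mm * (1.0 + magnification) / magnification ** 2


def diffraction_blur_mm(wavelength_mm: float, f_number: float) -> float:
    """Airy disk diameter 2.44 * wavelength * N, used as the diffraction blur size."""
    return 2.44 * wavelength_mm * f_number


COC_MM = 0.0069          # hypothetical blur limit: two 3.45 um pixels
MAGNIFICATION = 0.5      # hypothetical optical magnification
WAVELENGTH_MM = 550e-6   # 550 nm green light

for n in (2.8, 5.6, 8.0, 11.0):
    dof = depth_of_field_mm(n, COC_MM, MAGNIFICATION)
    blur_um = diffraction_blur_mm(WAVELENGTH_MM, n) * 1000.0
    flag = "diffraction exceeds blur limit" if blur_um > COC_MM * 1000.0 else "ok"
    print(f"f/{n}: DOF {dof:.2f} mm, diffraction blur {blur_um:.1f} um ({flag})")
```

With these values the diffraction blur overtakes the 6.9 µm limit somewhere between f/2.8 and f/5.6. Beyond that point, the extra depth of field comes at the cost of edge sharpness.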

Environmental Interference

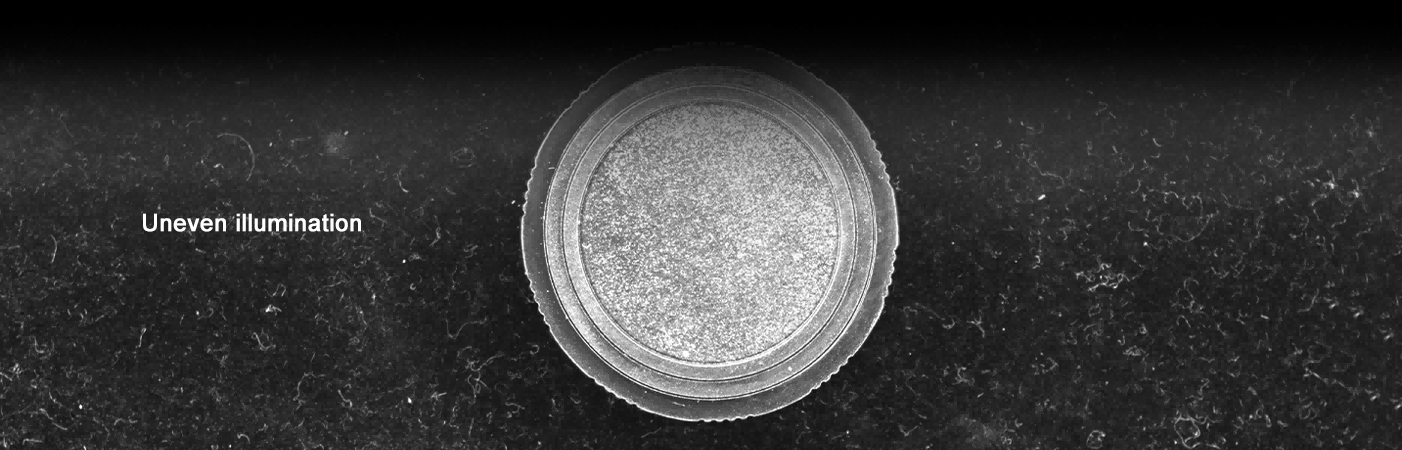

The environment in which a machine vision system operates can have a profound impact on the accuracy of dimension inspection. Lighting is both critical and highly variable: changes in illumination intensity, direction, and color temperature alter the appearance of objects in images. For example, uneven lighting can cast shadows on the object that may be misinterpreted as part of its shape, leading to incorrect dimension calculations. Reflective surfaces can also cause glare, which can saturate the camera sensor and obscure important features.
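A fixed intensity threshold makes the effect of illumination changes easy to see. The sketch below builds a hypothetical one-dimensional intensity profile across a bright part whose edges are blurred over four pixels. It measures the part's width as the distance between threshold crossings, located by linear interpolation. Dimming the light by 20% while keeping the same absolute threshold shrinks the measured width by one pixel, whereas a threshold set relative to the peak intensity does not move.

```python
def ramp_profile(peak: float, length: int = 45) -> list[float]:
    """Hypothetical 1-D profile of a bright part with edges blurred over 4 pixels."""
    values = []
    for x in range(length):
        if x <= 10 or x >= 34:
            level = 0.0                 # background
        elif x < 14:
            level = (x - 10) / 4.0      # rising edge
        elif x <= 30:
            level = 1.0                 # top of the part
        else:
            level = (34 - x) / 4.0      # falling edge
        values.append(peak * level)
    return values


def measured_width(profile: list[float], threshold: float) -> float:
    """Distance between the first and last threshold crossings,
    each located by linear interpolation between neighbouring pixels."""
    crossings = []
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if (a < threshold) != (b < threshold):
            crossings.append(i + (threshold - a) / (b - a))
    return crossings[-1] - crossings[0]


for peak in (200.0, 160.0):  # 160 simulates a 20% drop in illumination
    profile = ramp_profile(peak)
    fixed = measured_width(profile, threshold=100.0)
    relative = measured_width(profile, threshold=0.5 * max(profile))
    print(f"peak {peak:.0f}: fixed threshold {fixed:.1f} px, relative threshold {relative:.1f} px")
```

A relative threshold compensates only for a uniform change in brightness. A shadow or intensity gradient across the part still shifts one edge more than the other.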

Ambient temperature and humidity also affect the performance of machine vision systems. Temperature changes cause thermal expansion or contraction of both the object being inspected and the components of the vision system. This alters the part's true dimensions as well as the camera setup itself, for example its working distance and therefore its image scale. Humidity can cause condensation on lenses or other optical components, degrading image quality and measurement accuracy.
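The size of the thermal effect follows from the linear expansion relation ΔL = α·L·ΔT. The sketch uses approximate room-temperature expansion coefficients for aluminium and steel. The 100 mm part length and 5 K temperature swing are hypothetical.

```python
# Approximate linear expansion coefficients near room temperature, in 1/K.
ALPHA_PER_K = {"aluminium": 23e-6, "steel": 12e-6}


def thermal_growth_um(length_mm: float, alpha_per_k: float, delta_t_k: float) -> float:
    """Change in length in micrometres, from Delta L = alpha * L * Delta T."""
    return alpha_per_k * length_mm * delta_t_k * 1000.0


for material, alpha in ALPHA_PER_K.items():
    growth = thermal_growth_um(100.0, alpha, 5.0)  # hypothetical 100 mm part, 5 K swing
    print(f"{material}: {growth:.1f} um")
```

A 5 K swing changes a 100 mm aluminium part by roughly 11.5 µm, which on its own can consume a large share of a tight tolerance band.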

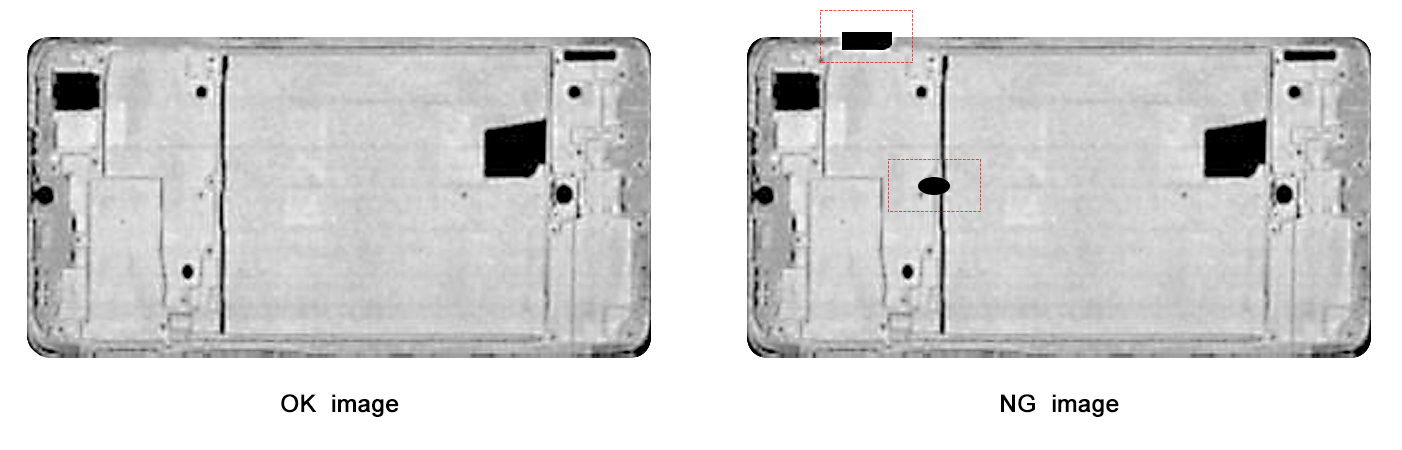

Object Flatness-Induced Challenges

The flatness of an object is an often-overlooked yet significant factor that hampers the accuracy of machine-vision-based dimension inspection. When an object's surface is uneven, the interaction between light and the object becomes unpredictable. In areas with bumps or depressions, light reflection deviates from the expected pattern. Instead of reflecting light in a consistent direction towards the camera, uneven surfaces scatter light, creating bright spots and shadows that do not correspond to the actual geometry of the object. These inconsistent lighting patterns can mislead edge-detection algorithms, causing them to incorrectly identify the boundaries of the object. For instance, a small protrusion on an otherwise flat surface might be mistaken for a distinct feature, resulting in inflated dimension measurements.

Moreover, in 3D machine vision systems that rely on techniques like structured light projection or stereo matching, an uneven surface disrupts the fundamental processes of depth perception. With structured light, the projected patterns get distorted on an irregular surface, making it challenging to accurately decode the depth information. In stereo vision, the variations in surface flatness can lead to errors in matching corresponding points between the two camera views, as the irregularities create disparities that do not reflect the true distances. As a result, reconstructing the object's 3D shape with high precision becomes a formidable task, directly impacting the accuracy of dimension inspection.
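For a rectified stereo pair, depth follows from disparity as Z = f·B/d, where f is the focal length in pixels, B the baseline, and d the disparity. Differentiating gives ΔZ ≈ Z²/(f·B)·Δd, so a small matching error Δd produces a depth error that grows with the square of the distance. The camera parameters in the sketch (2000 px focal length, 100 mm baseline, 500 mm working distance, half-pixel matching error) are hypothetical.

```python
def depth_mm(focal_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Depth from disparity for a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_mm / disparity_px


def depth_error_mm(focal_px: float, baseline_mm: float, depth: float,
                   disparity_error_px: float) -> float:
    """Linearized depth error: dZ ~= Z**2 / (f * B) * dd."""
    return depth ** 2 / (focal_px * baseline_mm) * disparity_error_px


F_PX, BASELINE_MM = 2000.0, 100.0   # hypothetical stereo rig
z = 500.0                            # true depth in mm
d = F_PX * BASELINE_MM / z           # corresponding disparity: 400 px
exact = depth_mm(F_PX, BASELINE_MM, d - 0.5) - z   # depth shift from a 0.5 px mismatch
print(f"linearized error {depth_error_mm(F_PX, BASELINE_MM, z, 0.5):.3f} mm, exact {exact:.3f} mm")
```

Here a half-pixel mismatch, of the kind an irregular surface can easily cause, shifts the reconstructed depth by about 0.6 mm.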

Algorithmic and Software Limitations

The algorithms and software used for dimension inspection have their own set of challenges. Edge detection, a fundamental step in determining object dimensions, is complex and error-prone. Common edge detectors, such as the Canny, Sobel, and Laplacian-based operators, each balance noise sensitivity against edge localization differently. Noise in the image can cause false edges to be detected, while low-contrast objects may produce missed edges.
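The tension between false and missed edges can be illustrated with a simplified one-dimensional gradient detector, which is not Canny or Sobel themselves. Edges are taken as local maxima of the gradient magnitude above a minimum strength. Each one is refined to sub-pixel precision by fitting a parabola through the peak sample and its two neighbours. The intensity profile and thresholds are hypothetical.

```python
def gradient(profile: list[float]) -> list[float]:
    """Central-difference derivative of a 1-D profile; endpoints are set to 0."""
    g = [0.0] * len(profile)
    for i in range(1, len(profile) - 1):
        g[i] = (profile[i + 1] - profile[i - 1]) / 2.0
    return g


def subpixel_edges(profile: list[float], min_strength: float) -> list[float]:
    """Positions of gradient-magnitude peaks at or above min_strength, each refined
    by fitting a parabola through the peak sample and its two neighbours."""
    g = [abs(v) for v in gradient(profile)]
    edges = []
    for i in range(1, len(g) - 1):
        if g[i] >= min_strength and g[i] > g[i - 1] and g[i] >= g[i + 1]:
            denom = g[i - 1] - 2.0 * g[i] + g[i + 1]
            # Vertex of the parabola relative to sample i.
            offset = 0.5 * (g[i - 1] - g[i + 1]) / denom if denom != 0 else 0.0
            edges.append(i + offset)
    return edges


# Hypothetical profile: dark background with one noise spike, then a rising edge.
noisy = [10, 10, 25, 10, 10, 30, 90, 110, 110, 110, 110]
print(subpixel_edges(noisy, min_strength=5.0))   # the spike is reported as a false edge
print(subpixel_edges(noisy, min_strength=20.0))  # only the real edge remains
```

Raising the threshold removes the false edge caused by noise, but the same setting would also discard a genuine low-contrast edge whose gradient falls below it.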

Furthermore, accurately fitting geometric models such as lines and circles to the detected edge points to calculate dimensions is difficult. Objects may have irregular shapes, surface defects, or variations in texture that confuse the algorithms. In addition, objects with complex three-dimensional geometries require advanced 3D reconstruction algorithms, which are computationally expensive and often lack the necessary accuracy.
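As an example of model fitting, the sketch below fits a circle to edge points with the Kåsa algebraic least-squares method. This method solves for D, E and F in x² + y² + D·x + E·y + F = 0. The point sets are synthetic. A single outlier, such as a burr on the part's edge, is enough to shift both the fitted center and the radius. This is one reason robust fitting methods are often preferred in practice.

```python
import math


def _det3(a: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def _solve3(m: list[list[float]], v: list[float]) -> list[float]:
    """Solve the 3x3 linear system m @ p = v with Cramer's rule."""
    d = _det3(m)
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        solution.append(_det3(mc) / d)
    return solution


def fit_circle(points: list[tuple[float, float]]) -> tuple[float, float, float]:
    """Kasa fit: least squares on x^2 + y^2 + D*x + E*y + F = 0 via normal equations."""
    sxx = sxy = syy = sx = sy = sxz = syz = sz = 0.0
    for x, y in points:
        z = x * x + y * y
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    n = float(len(points))
    D, E, F = _solve3([[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]], [-sxz, -syz, -sz])
    cx, cy = -D / 2.0, -E / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - F)


# Synthetic edge points on a circle centred at (3, -2) with radius 5.
clean = [(3 + 5 * math.cos(k * math.pi / 6), -2 + 5 * math.sin(k * math.pi / 6))
         for k in range(12)]
with_burr = clean + [(9.0, -2.0)]  # one point 1 unit outside the true edge
for name, pts in (("clean", clean), ("with burr", with_burr)):
    cx, cy, r = fit_circle(pts)
    print(f"{name}: centre ({cx:.3f}, {cy:.3f}), radius {r:.3f}")
```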

In conclusion, the difficulty of achieving high-precision dimension inspection with machine vision stems from a combination of hardware limitations, optical constraints, environmental interference, object flatness-related issues, and algorithmic and software challenges. Overcoming these obstacles requires continuous research and development in multiple fields, including optics, electronics, computer science, and materials science. By addressing these issues, we can improve the accuracy and reliability of machine vision systems for dimension inspection, enabling them to meet the increasingly stringent requirements of modern industrial applications.