Machine Vision Contour Detection

In the age of Industry 4.0 and smart automation, machine vision has become a core technology, letting machines “see” and interpret visual data with a precision that can exceed human capabilities in some tasks. Among its key functions, contour detection is critical: it extracts object boundary shapes from digital images, forming the basis for tasks like object recognition, dimension measurement, defect inspection, and robotic manipulation.

1. What is Contour Detection?

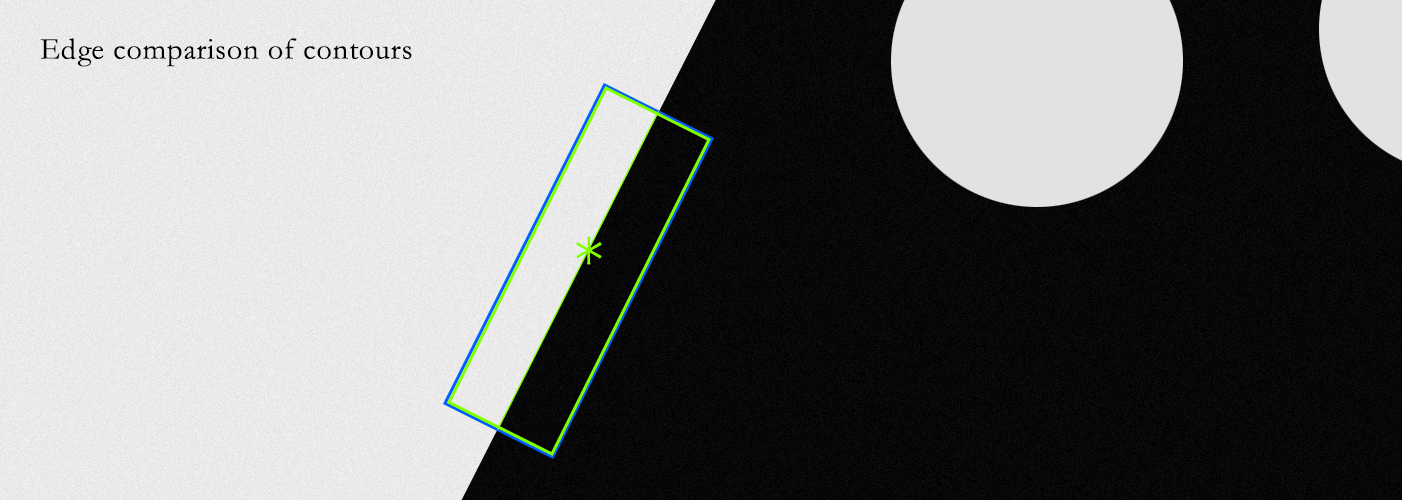

A “contour” in image processing is a curve joining the continuous points along an object’s boundary that share the same intensity or color, separating the object from its background. Unlike edges, which are isolated pixel-level light-dark transitions, contours are connected curves (closed loops, or open curves for partially visible objects) that represent an object’s shape, not just local intensity changes.

The main goal of contour detection is to simplify image data: reducing a 2D pixel grid to a set of boundary curves lets machines analyze an object’s geometry (size, angles, symmetry) efficiently, without processing every pixel. This is essential for real-time applications where speed and accuracy matter equally.
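As an illustration of how little data a contour needs to describe size, the following minimal sketch computes the enclosed area (shoelace formula) and the perimeter from an ordered list of boundary vertices. The function names and the rectangle are hypothetical and chosen only for this example; libraries such as OpenCV offer comparable routines (for instance cv2.contourArea and cv2.arcLength).

```python
import math

def contour_area(points):
    """Area enclosed by a closed contour, via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]  # wrap around to close the loop
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0

def contour_perimeter(points):
    """Sum of the straight-line distances between consecutive vertices."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))

# Hypothetical contour: a 4 x 3 rectangle given by its four corners.
rectangle = [(0, 0), (4, 0), (4, 3), (0, 3)]
print(contour_area(rectangle))       # 12.0
print(contour_perimeter(rectangle))  # 14.0
```

Four vertices are enough here to recover both measurements, whereas a pixel-based approach would have to visit every pixel inside the shape.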

2. Core Principles

Contour detection relies on two key steps: preprocessing (enhancing object-background contrast) and contour extraction (identifying boundary points). These address raw image flaws like noise, uneven lighting, or low contrast that obscure boundaries.

2.1 Preprocessing

Raw images rarely have clear boundaries, so preprocessing is vital:

Grayscale Conversion: Most algorithms use single-channel grayscale images (simplifying data from three RGB channels to one, as color is often irrelevant).

Noise Reduction: Gaussian blurring smooths images with a Gaussian kernel, cutting high-frequency noise while preserving major intensity shifts—critical for avoiding false edges from sensor interference or lighting fluctuations.

Edge Detection: Identifies pixel-level intensity changes (edges) that build contours. The Canny Edge Detector (a multi-stage method: smoothing, gradient calculation, non-maximum suppression, hysteresis thresholding) is a widely used default, producing thin, well-connected edges. The Sobel Operator, which approximates the intensity gradient in the horizontal and vertical directions, is simpler and faster but yields thicker edge responses that usually need thresholding or thinning.

Thresholding: Converts a grayscale image or gradient-magnitude map to a binary (black/white) image, with foreground (object or edge pixels) as 1 and background as 0 (255 and 0 in 8-bit images), simplifying contour tracing.
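The preprocessing steps above can be sketched end to end in plain Python. This is a teaching sketch under stated assumptions, not a production pipeline: it uses the Sobel gradient magnitude rather than the full Canny detector to stay short, replicates border pixels, and the helper names, the synthetic image, and the threshold level of 400 are all chosen just for this example. In practice a library such as OpenCV (cv2.cvtColor, cv2.GaussianBlur, cv2.Sobel, cv2.threshold) would do the same work far faster.

```python
import math

def to_grayscale(rgb):
    """Collapse RGB to one channel with BT.601 luminance weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row] for row in rgb]

def gaussian_kernel(size=3, sigma=1.0):
    """Build a normalized size x size Gaussian kernel."""
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]

def filter2d(image, kernel):
    """Slide the kernel over the image (correlation), replicating border pixels."""
    h, w = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky, kernel_row in enumerate(kernel):
                for kx, weight in enumerate(kernel_row):
                    yy = min(max(y + ky - half, 0), h - 1)  # clamp to image
                    xx = min(max(x + kx - half, 0), w - 1)
                    acc += weight * image[yy][xx]
            out[y][x] = acc
    return out

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(gray):
    """Gradient magnitude from horizontal and vertical Sobel responses."""
    gx = filter2d(gray, SOBEL_X)
    gy = filter2d(gray, SOBEL_Y)
    return [[math.hypot(a, b) for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]

def threshold(image, level):
    """Binary map: 1 where the value exceeds level, otherwise 0."""
    return [[1 if v > level else 0 for v in row] for row in image]

def preprocess(rgb, level):
    """Grayscale -> Gaussian blur -> Sobel magnitude -> global threshold."""
    smooth = filter2d(to_grayscale(rgb), gaussian_kernel())
    return threshold(sobel_magnitude(smooth), level)

# Synthetic 6 x 6 image: dark left half, bright right half.
DARK, BRIGHT = (0, 0, 0), (255, 255, 255)
image = [[DARK] * 3 + [BRIGHT] * 3 for _ in range(6)]
edges = preprocess(image, level=400)  # level picked by hand for this image
for row in edges:
    print(row)  # each row: [0, 0, 1, 1, 0, 0]
```

The two columns marked 1 straddle the dark-to-bright step, which shows the thicker response typical of a thresholded Sobel map; Canny's non-maximum suppression is the stage that would thin it to a single pixel.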

2.2 Contour Extraction

After preprocessing, algorithms trace connected foreground pixels to form contours. Border-following methods walk along the boundary of each connected region and record its pixels in order. The Freeman Chain Code is a widely used compact representation of the result: it stores a contour as a start point plus a sequence of direction codes (4 or 8 directions) giving the step from each pixel to the next, reducing storage and enabling easy shape comparison. Libraries like OpenCV simplify extraction with functions like findContours(), which returns each contour's pixel coordinates; the contours can then be filtered (by area or aspect ratio) to remove noise.
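A minimal sketch of Freeman chain coding, assuming the boundary pixels have already been traced and are given in order. The numbering used here (0 = right, then counterclockwise in steps of 45 degrees, with image coordinates where y grows downward) is one common convention and is an assumption of this example, as are the function names.

```python
# Map each (dx, dy) step to an 8-direction Freeman code.
# Image coordinates: x grows to the right, y grows downward,
# so "up" on screen corresponds to dy = -1.
DIRECTIONS = {
    (1, 0): 0, (1, -1): 1, (0, -1): 2, (-1, -1): 3,
    (-1, 0): 4, (-1, 1): 5, (0, 1): 6, (1, 1): 7,
}

def freeman_chain_code(points):
    """Encode an ordered, closed, 8-connected boundary as direction codes."""
    codes = []
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]  # the last point steps back to the first
        step = (x1 - x0, y1 - y0)
        if step not in DIRECTIONS:
            raise ValueError(f"points {i} and {(i + 1) % n} are not neighbors")
        codes.append(DIRECTIONS[step])
    return codes

def decode_chain_code(start, codes):
    """Rebuild the boundary points from a start point and its chain code."""
    steps = {code: step for step, code in DIRECTIONS.items()}
    points = [start]
    x, y = start
    for code in codes[:-1]:  # the final code only closes the loop
        dx, dy = steps[code]
        x, y = x + dx, y + dy
        points.append((x, y))
    return points

# Boundary of a small square, listed clockwise as seen on screen.
square = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (1, 2), (0, 2), (0, 1)]
codes = freeman_chain_code(square)
print(codes)                             # [0, 0, 6, 6, 4, 4, 2, 2]
print(decode_chain_code((0, 0), codes))  # reproduces the square
```

Each step fits in 3 bits instead of a full coordinate pair, which is where the storage saving comes from, and the code sequence does not change when the shape is translated.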

3. Advanced Techniques

Traditional methods work for controlled, high-contrast environments, but real-world scenarios (uneven lighting, overlapping objects) need advanced approaches:

Adaptive Thresholding: Calculates local thresholds for each pixel (vs. a single global threshold), ideal for images with varying lighting (e.g., industrial parts under factory lights).

Deep Learning-Based Detection: Convolutional Neural Networks (CNNs) learn to extract boundaries directly from raw images, reducing the need for hand-tuned preprocessing. Models like HED (Holistically-Nested Edge Detection) and RCF (Richer Convolutional Features) fuse multi-scale CNN features into detailed edge maps, excelling in complex scenes (medical images, cluttered environments).
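To make the adaptive thresholding idea concrete, here is a minimal mean-based sketch compared with a single global threshold. The `margin` parameter and the synthetic image are assumptions made for this example: a pixel counts as foreground when it exceeds the mean of its neighborhood by `margin`. OpenCV's cv2.adaptiveThreshold follows the same principle but subtracts a constant from the local mean instead.

```python
def global_threshold(gray, level):
    """One threshold for the whole image."""
    return [[1 if v > level else 0 for v in row] for row in gray]

def adaptive_mean_threshold(gray, block_size=3, margin=15):
    """Foreground where a pixel exceeds its local mean by `margin`.

    The neighborhood is a block_size x block_size window, clipped at the
    image border.
    """
    h, w = len(gray), len(gray[0])
    half = block_size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [gray[yy][xx]
                      for yy in range(max(0, y - half), min(h, y + half + 1))
                      for xx in range(max(0, x - half), min(w, x + half + 1))]
            local_mean = sum(window) / len(window)
            out[y][x] = 1 if gray[y][x] > local_mean + margin else 0
    return out

# Synthetic image: background brightness rises from left to right
# (10, 60, 110) and each region contains one spot 40 levels brighter.
row = [10, 50, 10, 60, 100, 60, 110, 150, 110]
gray = [row, row, row]

print(global_threshold(gray, 80)[0])      # [0, 0, 0, 0, 1, 0, 1, 1, 1]
print(adaptive_mean_threshold(gray)[0])   # [0, 1, 0, 0, 1, 0, 0, 1, 0]
```

The global threshold misses the spot in the dark region and floods the bright one, while the local version recovers all three spots because each pixel is judged only against its own surroundings.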

4. Key Challenges

Despite progress, real-world hurdles remain:

Noise and Lighting: Sensor noise and variable lighting on factory floors, in low light, or outdoors produce broken or false contours.

Overlapping/Occluded Objects: Stacked parts merge contours, making individual shapes hard to distinguish.

Transparent/Reflective Materials: Glass transmits and refracts light, and polished metal produces specular reflections, creating weak or distorted edges.

Real-Time Performance: Industrial tasks (assembly line inspection) often need 30 or more frames per second (FPS), which leaves roughly 33 ms to process each frame. Deep learning models typically require optimization (quantization, GPU acceleration) to meet such speed demands.
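The real-time requirement translates into a per-frame time budget, which a short timing harness can check. This is a rough sketch: `process_frame` stands for whatever contour pipeline is being evaluated, and the function names and the default of 20 timed runs are assumptions of this example.

```python
import time

def frame_budget_ms(target_fps):
    """Time available per frame, in milliseconds, at the target frame rate."""
    return 1000.0 / target_fps

def meets_budget(process_frame, frame, target_fps=30, runs=20):
    """Time `process_frame` over several runs and compare with the budget.

    Returns (within_budget, mean_milliseconds_per_frame).
    """
    start = time.perf_counter()
    for _ in range(runs):
        process_frame(frame)
    mean_ms = (time.perf_counter() - start) * 1000.0 / runs
    return mean_ms <= frame_budget_ms(target_fps), mean_ms

print(round(frame_budget_ms(30), 1))  # 33.3
print(round(frame_budget_ms(60), 1))  # 16.7

# Placeholder pipeline that does nothing, used only to show the call.
ok, mean_ms = meets_budget(lambda frame: None, frame=None)
print(ok)
```

Averaging over several runs smooths out scheduling jitter, though a production check would also look at worst-case latency, since a single slow frame can stall a line.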

5. Real-World Applications

Contour detection drives automation across industries:

Industrial Quality Inspection: Checks for defects (cracks, dents) in manufacturing. For example, automotive production verifies engine components (gears, gaskets) match design contours, rejecting off-tolerance parts.

Robotic Pick-and-Place: Helps robots locate objects. In warehouses, robotic arms use contours to find packages on conveyors, calculate center/orientation, and adjust grips.

Medical Imaging: Segments anatomical structures (tumors in CT scans, cell boundaries in histology slides) to aid diagnosis. Deep learning models tend to cope with the high variability of biological tissue better than fixed-threshold methods.

Agriculture: Sorts fruits (apples, oranges) by size/ripeness via contour analysis and detects crop diseases from leaf contour changes.

Traffic Monitoring: Tracks vehicles, measures flow, or identifies accidents (via unusual contours or stationary objects) using surveillance cameras.
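For the pick-and-place case above, the center and orientation of an object can be estimated from image moments. The sketch below works on a filled binary mask of the object (the region enclosed by its contour) and is an illustration under stated assumptions: the function name and the tiny masks are hypothetical, and angles are measured in image coordinates, where y grows downward. OpenCV exposes the same quantities through cv2.moments.

```python
import math

def centroid_and_orientation(mask):
    """Centroid (x, y) and principal-axis angle in degrees of a binary mask.

    Uses first-order moments for the centroid and second-order central
    moments for the orientation of the major axis.
    """
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    if not pixels:
        raise ValueError("mask contains no foreground pixels")
    count = len(pixels)
    cx = sum(x for x, _ in pixels) / count
    cy = sum(y for _, y in pixels) / count
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    angle = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return (cx, cy), math.degrees(angle)

# A horizontal bar: the major axis lies along x.
bar = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
print(centroid_and_orientation(bar))       # ((3.0, 1.0), 0.0)

# A diagonal line running from top-left to bottom-right.
diagonal = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]
center, angle = centroid_and_orientation(diagonal)
print(center, round(angle, 6))             # (1.0, 1.0) 45.0
```

A gripper controller could use the centroid as the grasp point and the angle to align its jaws with the object's long axis. For shapes with no dominant axis, such as circles or squares, the angle is not meaningful.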

6. Future Trends

Three trends will shape contour detection:

Edge AI Integration: Lightweight models (quantized CNNs) on edge devices (industrial cameras, drones) enable real-time processing without cloud reliance—critical for autonomous robots.

Multi-Modal Fusion: Combining visual data with LiDAR/thermal imaging improves detection in tough conditions (e.g., thermal imaging enhances low-light boundaries; LiDAR adds 3D depth for overlapping objects).

Explainable AI (XAI): XAI techniques will clarify how deep learning models detect contours, building trust in critical fields (medical diagnosis, aerospace inspection).

Conclusion

Machine vision contour detection connects raw image data to actionable insights, powering automation and quality control. From traditional edge detection to deep learning, it has evolved to tackle complex challenges. As technology advances, it will remain central to smart systems, making machines more capable and reliable across industries.